Serverless GraphQL API

Say you're building an app. It needs data from a server. What do you do?

You make a fetch() request.

fetch("https://swapi.dev/api/people/1/").then((res) => res.json()).then(console.log)

And you get eeeevery piece of info about Luke Skywalker.

{"name": "Luke Skywalker","height": "172","mass": "77","hair_color": "blond","skin_color": "fair","eye_color": "blue","birth_year": "19BBY","gender": "male","homeworld": "https://swapi.dev/api/planets/1/","films": ["https://swapi.dev/api/films/2/","https://swapi.dev/api/films/6/","https://swapi.dev/api/films/3/","https://swapi.dev/api/films/1/","https://swapi.dev/api/films/7/"],"species": ["https://swapi.dev/api/species/1/"],"vehicles": ["https://swapi.dev/api/vehicles/14/","https://swapi.dev/api/vehicles/30/"],"starships": ["https://swapi.dev/api/starships/12/","https://swapi.dev/api/starships/22/"],"created": "2014-12-09T13:50:51.644000Z","edited": "2014-12-20T21:17:56.891000Z","url": "https://swapi.dev/api/people/1/"}

Frustrating ... all you wanted was his name and hair color. Why's the API sending you all this crap? 🤦‍♂️

And what's this about Luke's species being 1? What the heck is 1?

You make another fetch request.

fetch("https://swapi.dev/api/species/1/").then((res) => res.json()).then(console.log)

You get data about humans. Great.

{"name": "Human","classification": "mammal","designation": "sentient","average_height": "180","skin_colors": "caucasian, black, asian, hispanic","hair_colors": "blonde, brown, black, red","eye_colors": "brown, blue, green, hazel, grey, amber","average_lifespan": "120","homeworld": "https://swapi.dev/api/planets/9/","language": "Galactic Basic","people": ["https://swapi.dev/api/people/1/","https://swapi.dev/api/people/4/","https://swapi.dev/api/people/5/","https://swapi.dev/api/people/6/","https://swapi.dev/api/people/7/","https://swapi.dev/api/people/9/","https://swapi.dev/api/people/10/","https://swapi.dev/api/people/11/","https://swapi.dev/api/people/12/","https://swapi.dev/api/people/14/","https://swapi.dev/api/people/18/","https://swapi.dev/api/people/19/","https://swapi.dev/api/people/21/","https://swapi.dev/api/people/22/","https://swapi.dev/api/people/25/","https://swapi.dev/api/people/26/","https://swapi.dev/api/people/28/","https://swapi.dev/api/people/29/","https://swapi.dev/api/people/32/","https://swapi.dev/api/people/34/","https://swapi.dev/api/people/43/","https://swapi.dev/api/people/51/","https://swapi.dev/api/people/60/","https://swapi.dev/api/people/61/","https://swapi.dev/api/people/62/","https://swapi.dev/api/people/66/","https://swapi.dev/api/people/67/","https://swapi.dev/api/people/68/","https://swapi.dev/api/people/69/","https://swapi.dev/api/people/74/","https://swapi.dev/api/people/81/","https://swapi.dev/api/people/84/","https://swapi.dev/api/people/85/","https://swapi.dev/api/people/86/","https://swapi.dev/api/people/35/"],"films": ["https://swapi.dev/api/films/2/","https://swapi.dev/api/films/7/","https://swapi.dev/api/films/5/","https://swapi.dev/api/films/4/","https://swapi.dev/api/films/6/","https://swapi.dev/api/films/3/","https://swapi.dev/api/films/1/"],"created": "2014-12-10T13:52:11.567000Z","edited": "2015-04-17T06:59:55.850671Z","url": "https://swapi.dev/api/species/1/"}

That's a lot of JSON to get the word "Human" out of the Star Wars API ...

What about Luke's starships? There's 2 and that means 2 more API requests ...

fetch("https://swapi.dev/api/starships/12/").then((res) => res.json()).then(console.log)
fetch("https://swapi.dev/api/starships/22/").then((res) => res.json()).then(console.log)

Wanna know how much JSON that dumps? Try a guess. 🙄

You made 4 API requests and transferred a bunch of data to find out that Luke Skywalker is human, has blond hair, and flies an X-Wing and an Imperial Shuttle.

And guess what, you didn't cache anything. How often do you think this data changes? Once a year? Twice?

🤦‍♂️
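Even before GraphQL enters the picture, a tiny cache would spare you the repeat requests. Here's a minimal sketch, assuming the data changes so rarely that entries never need to expire. `withCache` and the injected fetcher are hypothetical names, not part of any library.

```typescript
// A function that loads JSON for a URL, e.g. a thin wrapper around fetch().
type FetchJson = (url: string) => Promise<unknown>;

// Wraps a fetcher with a naive in-memory cache keyed by URL.
// Assumption: entries never expire, which only suits data that rarely changes.
function withCache(fetchJson: FetchJson): FetchJson {
  const cache = new Map<string, Promise<unknown>>();

  return (url) => {
    let hit = cache.get(url);
    if (!hit) {
      // Cache the promise itself so concurrent callers share one request.
      // Failed requests are evicted so the next call can retry.
      hit = fetchJson(url).catch((err) => {
        cache.delete(url);
        throw err;
      });
      cache.set(url, hit);
    }
    return hit;
  };
}
```

You'd wrap your real fetcher once, something like `withCache((url) => fetch(url).then((res) => res.json()))`, and call the wrapped function everywhere else.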

GraphQL to the rescue

Here's what the same process looks like with GraphQL.

query luke {
  # id found through allPeople query
  person(id: "cGVvcGxlOjE=") {
    name
    hairColor
    species {
      name
    }
    starshipConnection {
      starships {
        name
      }
    }
  }
}

And the API returns what you wanted with 1 request.

{
  "data": {
    "person": {
      "name": "Luke Skywalker",
      "hairColor": "blond",
      "species": null,
      "starshipConnection": {
        "starships": [{ "name": "X-wing" }, { "name": "Imperial shuttle" }]
      }
    }
  }
}

An API mechanism that gives you flexibility on the frontend, slashes API requests, and doesn't transfer data you don't need?

😲

Write a query, say what you want, send to an endpoint, GraphQL figures out the rest. Want different params? Just say so. Want multiple models? Got it. Wanna go deep? You can.

Without changes on the server. Within reason.
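"Send to an endpoint" usually means a POST with a JSON body that holds the query text and, optionally, variables. A minimal sketch of building that request; `buildGraphQLRequest` is a hypothetical helper and the endpoint URL is yours to fill in.

```typescript
// Shape of a typical GraphQL-over-HTTP POST body.
interface GraphQLRequestBody {
  query: string;
  variables?: Record<string, unknown>;
}

// Builds the options you'd pass to fetch(endpoint, options).
function buildGraphQLRequest(query: string, variables?: Record<string, unknown>) {
  const body: GraphQLRequestBody = { query, variables };
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body), // undefined variables are dropped by JSON.stringify
  };
}

const lukeQuery = `
  query luke {
    person(id: "cGVvcGxlOjE=") {
      name
      hairColor
    }
  }
`;

const lukeRequest = buildGraphQLRequest(lukeQuery);
```

From there it's `fetch(endpoint, lukeRequest).then((res) => res.json())`, same as any other API call.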

GraphQL client libraries can add caching to avoid duplicate requests. And because queries are structured, clients can merge queries into 1 API call.

I fell in love the moment it clicked.

You can try it on graphql.org's public playground.

What is GraphQL

GraphQL is an open-source data query and manipulation language for APIs and a runtime for fulfilling queries with existing data.

Touted as a replacement for REST, GraphQL is actually a different approach to APIs. Better for common use-cases and with its own drawbacks.

Don't let the propaganda fool you 👉 you can and should use both REST and GraphQL. Depends on what you're doing.

GraphQL's benefit is its declarative nature.

On the client, you describe the shape of what you want and GraphQL figures it out. On the server, you write resolver functions for sub-queries and GraphQL combines them into the full result.
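To make "combines them into the full result" concrete, here's a toy executor. It is not how a real GraphQL runtime works: resolvers are keyed by field name only, everything is synchronous, and lists aren't handled. All names and data are made up for illustration.

```typescript
// A selection lists the fields you want; nested objects select sub-fields.
type Selection = { [field: string]: true | Selection };
// A resolver receives the parent object and returns the field's value.
type Resolver = (parent: any) => unknown;
type ResolverMap = { [field: string]: Resolver };

// Toy executor: resolve each selected field, then recurse into sub-selections.
// Fields without a resolver fall back to reading the property off the parent.
function execute(selection: Selection, resolvers: ResolverMap, parent: any = {}): any {
  const result: { [field: string]: unknown } = {};
  for (const [field, sub] of Object.entries(selection)) {
    const resolve = resolvers[field];
    const value = resolve ? resolve(parent) : parent[field];
    if (sub === true || value == null) {
      result[field] = value;
    } else {
      result[field] = execute(sub, resolvers, value);
    }
  }
  return result;
}

// Hypothetical resolvers backed by hard-coded data.
const toyResolvers: ResolverMap = {
  person: () => ({ name: "Luke Skywalker", hair_color: "blond", speciesId: 1 }),
  hairColor: (person) => person.hair_color,
  species: (person) =>
    person.speciesId === 1 ? { name: "Human", language: "Galactic Basic" } : null,
};

const lukeResult = execute(
  { person: { name: true, hairColor: true, species: { name: true } } },
  toyResolvers
);
```

Each resolver only knows about its own field. The executor walks the requested shape and stitches the pieces together, which is why `language` never shows up in the result.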

REST queries can be declarative on the client, while GraphQL is declarative on both ends. For reads and writes.

GraphQL queries

GraphQL queries fetch data. Following this pattern:

query {
  what_you_want {
    its_property
  }
}

You nest fields – representing your models – and properties into queries to describe what you're looking for. You can go as deep as you want.

Write fields side-by-side to execute multiple queries with a single API request.

query {
  what_you_want {
    its_property
  }
  other_thing_you_want {
    its_property {
      property_of_property
    }
  }
}

This is where the power and flexibility come from.

Variables let you create dynamic queries and build complex filters to limit the scope of your result.

query queryName($filterProp: String = "value") {
  field(filterProp: $filterProp) {
    property1
    property3
  }
}

$filterProp declares a String variable with a default of "value". The field() lookup uses it to filter results for those matching the value. Clients can send a different value with each request.

GraphQL doesn't come with filters built in. Field arguments are passed to your resolvers and you decide what they mean. Typical projects choose to support equality, sorting, greater-than, not-equals, etc.
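Here's roughly what filter support looks like inside a resolver. This is a sketch with made-up sample data and hypothetical argument names (`nameEq`, `crewGt`, `sortBy`); your schema decides what the real ones are called.

```typescript
interface Starship {
  name: string;
  crew: number;
}

// Hypothetical filter arguments a field might accept.
interface StarshipFilter {
  nameEq?: string; // equality
  crewGt?: number; // greater-than
  sortBy?: "name" | "crew";
}

// Illustrative sample data, not fetched from any API.
const sampleShips: Starship[] = [
  { name: "X-wing", crew: 1 },
  { name: "Imperial shuttle", crew: 6 },
  { name: "Millennium Falcon", crew: 4 },
];

// Resolver-style function: (parent, args) => result. The parent is unused here.
function starshipsResolver(_parent: unknown, args: StarshipFilter): Starship[] {
  const matches = sampleShips.filter(
    (ship) =>
      (args.nameEq === undefined || ship.name === args.nameEq) &&
      (args.crewGt === undefined || ship.crew > args.crewGt)
  );
  // Sort a copy so the source data keeps its order.
  if (args.sortBy === "name") return [...matches].sort((a, b) => a.name.localeCompare(b.name));
  if (args.sortBy === "crew") return [...matches].sort((a, b) => a.crew - b.crew);
  return matches;
}
```

A query like `starships(crewGt: 2, sortBy: "name")` would land in this function with those values in `args`.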

GraphQL mutations

GraphQL mutations write data. Following this pattern:

mutation {
  what_youre_updating(argument: "value", argument2: "value 2") {
    prop_you_want_back
  }
}

Like a query with arguments, you specify what you're updating and pass your values. What's available depends on how mutation resolvers are implemented on the server.

The mutation body specifies what you'd like returned. Same as a query.

Like queries, you can put mutations side-by-side and use variables.

mutation mutationName($argument: String = "value") {
  what_youre_updating(argument: $argument) {
    prop_you_want_back
  }
  other_field(argument: $argument) {
    return_prop
  }
}

GraphQL vs. REST, which to use and when

The GraphQL vs. REST debate is a false dichotomy.

People like to say GraphQL is replacing REST and that's not the case. GraphQL is augmenting REST.

Will GraphQL become the default API layer? It might.

Should you rip out your REST API and rewrite for GraphQL? Please don't.

GraphQL doesn't care where data comes from. Relational database, NoSQL database, files, a REST API, another GraphQL API ... All you need is a function that reads data in response to a query.

You can start using GraphQL without changing your existing server with a GraphQL middle layer. Create a GraphQL server on top of your REST API.
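A middle layer like that is mostly resolvers that call your REST endpoints. Here's a sketch against the Star Wars API from earlier, assuming a hypothetical schema with a `Person` type that has `hairColor` and `species` fields. The REST client is injected so you can swap in a stub.

```typescript
// Loads JSON from a REST URL. Pass a fetch() wrapper in production, a stub in tests.
type RestClient = (url: string) => Promise<any>;

const SWAPI = "https://swapi.dev/api";

// Builds a resolver map, keyed by type name and then field name.
function makeRestResolvers(rest: RestClient) {
  return {
    Query: {
      // person(id: ...) becomes GET /people/:id/
      person: (_parent: unknown, args: { id: string }) => rest(`${SWAPI}/people/${args.id}/`),
    },
    Person: {
      // Rename the REST field to the camelCase name clients see.
      hairColor: (person: any) => person.hair_color,
      // Follow the species links so clients get objects instead of URLs.
      species: (person: any) => {
        const urls: string[] = person.species;
        return Promise.all(urls.map((url) => rest(url)));
      },
    },
  };
}
```

The REST API stays untouched. Clients ask for `species { name }` and the extra requests happen on the server, close to the data.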

How do you choose between REST and GraphQL?

When building a typical CRUD – Create, Read, Update, Delete – API, I like to ask 6 questions:

- Is this a new project? Default to GraphQL

- Do I know in advance what clients are going to need? REST is great

- Am I fetching small subsets of large data? GraphQL

- Am I generating new values for each request? REST

- Updating a few properties on an object? GraphQL

- Submitting large payloads to be saved? REST

Use upserts to deal with Create and Update. Avoid deletes.

Should you expose your entire data model verbatim?

No.

Exposing your full data model is a common mistake in API design.

Define a clean API using domain driven design. How your backend stores data for max performance and good database design differs from how clients think about that data.

An "access to everything" API style is hard to use, leads to security issues, is difficult to maintain, and tightly couples servers and clients. You'll have to update both server and client for any DB change.

Then there's the question of derived and computed properties with complex business logic. You don't want to re-implement those on the client using raw data from your database.
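A sketch of what that looks like in practice, using a made-up order model. The storage shape, the API shape, and the discount rule are all assumptions for illustration.

```typescript
// How the database stores an order (hypothetical storage shape).
interface OrderRow {
  order_id: string;
  unit_price_cents: number;
  quantity: number;
  discount_pct: number;
}

// What clients see: a clean id and a ready-to-display total.
interface Order {
  id: string;
  total: string;
}

// Business logic lives on the server, so every client gets the same answer.
function toApiOrder(row: OrderRow): Order {
  const cents = Math.round(row.unit_price_cents * row.quantity * (1 - row.discount_pct / 100));
  return { id: row.order_id, total: (cents / 100).toFixed(2) };
}
```

Clients never learn that prices are stored in cents or how discounts apply. You can change either without shipping a new frontend.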

How to create a serverless GraphQL server

There are many ways to build a GraphQL server. The nicest I've found is Apollo's apollo-server-lambda package.

You follow a 4 step process:

- Add lambda to your serverless.yml file

- Initialize the Apollo server

- Specify your schema

- Add resolvers

We created a small REST API in the previous chapter. Let's recreate it with GraphQL. Full code on GitHub.

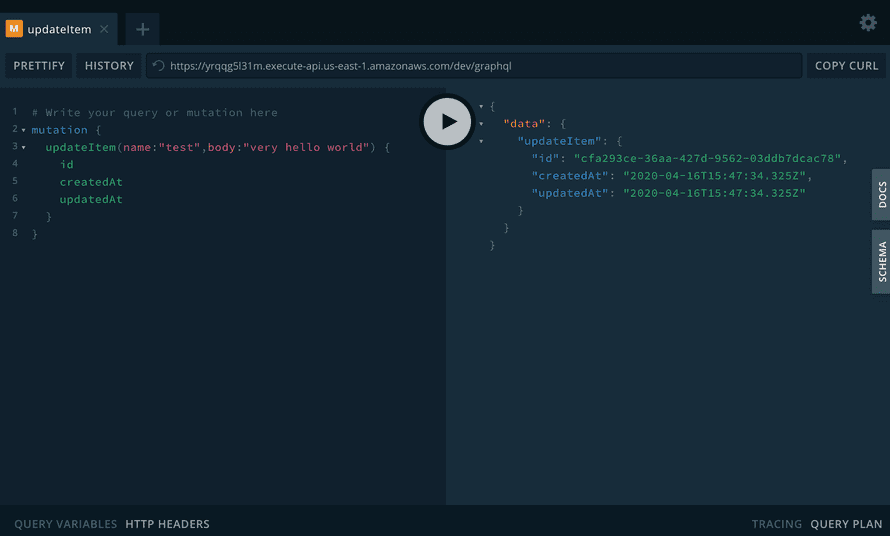

Apollo creates a GraphQL playground for us. You can try my implementation here:

Change the URL inside the playground to: https://yrqqg5l31m.execute-api.us-east-1.amazonaws.com/dev/graphql. Apollo drops the /dev/ part.

serverless.yml

We define a new function in the functions: section.

# serverless.yml
functions:
  graphql:
    handler: dist/graphql.handler
    events:
      - http:
          path: graphql
          method: GET
          cors: true
      - http:
          path: graphql
          method: POST
          cors: true

The convention is to use the /graphql endpoint for everything.

Make sure to define both GET and POST endpoints. GET serves the Apollo playground, POST handles the queries and mutations.

initialize Apollo server

Every Apollo server needs a schema, a resolvers object, and the initialized server. Like this:

// src/graphql.ts
import { ApolloServer, gql } from "apollo-server-lambda"
// this is where we define the shape of our API
const schema = gql``
// this is where the shape maps to functions
const resolvers = {
  Query: {},
  Mutation: {},
}
const server = new ApolloServer({ typeDefs: schema, resolvers })
export const handler = server.createHandler({
  cors: {
    origin: "*", // for security in production, lock this to your real URLs
    credentials: true,
  },
})

This server won't run because the schema and resolvers don't match. Resolvers have fields that the schema does not.

We'll add type definitions to the schema and a resolver for each Query and Mutation.

specify your schema

Your schema defines the shape of your API. Every type of object your server returns and every query and mutation definition.

You get a type-safe API because GraphQL ensures objects follow this schema. Both when coming into the API and when flying out.

To mimic our CRUD API from before, we use a schema like this:

// src/graphql.ts
const schema = gql`
  type Item {
    id: String
    name: String
    body: String
    createdAt: String
    updatedAt: String
  }
  type Query {
    item(id: String!): Item
  }
  type Mutation {
    updateItem(id: String, name: String, body: String): Item
    deleteItem(id: String!): Item
  }
`

With REST, users could store and retrieve arbitrary JSON blobs. GraphQL's built-in scalar types don't support that. Instead, we define an Item type with an arbitrary body string.

You can use that to store serialized JSON objects.
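A minimal sketch of that round trip. `encodeBody` and `decodeBody` are hypothetical helpers, not part of the project's code.

```typescript
// Serialize any JSON-compatible value into the Item.body string.
function encodeBody(value: unknown): string {
  return JSON.stringify(value);
}

// Parse a body back into a value. Returns null when the body isn't valid JSON,
// so callers can't tell that apart from a stored null.
function decodeBody(body: string): unknown {
  try {
    return JSON.parse(body);
  } catch {
    return null;
  }
}
```

The client encodes before calling the mutation and decodes after reading. The server treats the body as an opaque string.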

Each item will have an id, a name, and a few timestamps managed by the server. Those help with debugging.

Our item() query lets you retrieve items and requires an id to work. That's the exclamation point after String.

Then we have a mutation for upserting items and a mutation for deleting.
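Here's how a client might talk to this schema. It's a sketch: the operation names `getItem` and `upsertItem` and the sample values are made up, while the fields and argument types come from the schema above.

```typescript
// Fetch one item. $id is required, matching item(id: String!).
const getItemQuery = `
  query getItem($id: String!) {
    item(id: $id) {
      id
      name
      body
      updatedAt
    }
  }
`;

// Upsert: leave out id to create, include it to update.
const upsertItemMutation = `
  mutation upsertItem($id: String, $name: String, $body: String) {
    updateItem(id: $id, name: $name, body: $body) {
      id
      createdAt
      updatedAt
    }
  }
`;

// The JSON payload a client would POST to /graphql to create an item.
const createPayload = JSON.stringify({
  query: upsertItemMutation,
  variables: { name: "my item", body: JSON.stringify({ hello: "world" }) },
});
```

Passing values as variables instead of splicing them into the query string keeps quoting and escaping out of your hands.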

create your resolvers

We have 1 query and 2 mutations. That means we'll need 3 resolver functions.

We can copy these from the REST implementation and change how we get arguments.

I like to put queries in a src/queries.ts file and mutations in a src/mutations.ts. You can organize this by model as your implementation grows.

item()

The item() query is a basic fetch and looks like this:

// src/queries.ts
import * as db from "simple-dynamodb"
function remapProps(item: any) {
  return {
    ...item,
    id: item.itemId,
    name: item.itemName,
  }
}
// fetch using item(id: String)
export const item = async (_: any, args: { id: string }) => {
  const item = await db.getItem({
    TableName: process.env.ITEM_TABLE!,
    Key: {
      itemId: args.id,
    },
  })
  return remapProps(item.Item)
}

We can ignore Apollo's first resolver argument. The second argument is where query and mutation values come in.

Since id is a required param, you don't have to check for nulls. GraphQL handles that before calling your resolver.

You can call getItem on DynamoDB, or run a SQL query for a relational database, and return the result. GraphQL handles the rest.

One niggle to note 👉 DynamoDB considers name and id reserved attributes. We remap them to itemName and itemId when saving and have to map them back when reading.

This is what I meant by "You don't want to expose raw database details to your API" :)

updateItem()

The updateItem() mutation is our upsert.

It creates a new item when you don't send an id and updates the existing item when you do. If no item is found, the mutation throws an error.

And it handles createdAt and updatedAt timestamps.

// src/mutations.ts
import { v4 as uuidv4 } from "uuid" // assumes uuidv4 comes from the uuid package
import * as db from "simple-dynamodb"
// remapProps is the same helper shown in src/queries.ts
type ItemArgs = {
  id?: string // optional, leave it out to create a new item
  name: string
  body: string
}
// upsert an item
// updateItem(name, ...) or updateItem(id, name, ...)
export const updateItem = async (_: any, args: ItemArgs) => {
  const itemId = args.id ? args.id : uuidv4()
  let createdAt = new Date().toISOString()
  // find item if exists
  if (args.id) {
    const find = await db.getItem({
      TableName: process.env.ITEM_TABLE!,
      Key: { itemId },
    })
    if (find.Item) {
      // save createdAt so we don't overwrite on update
      createdAt = find.Item.createdAt
    } else {
      throw new Error("Item not found")
    }
  }
  const updateValues = {
    itemName: args.name,
    body: args.body,
  }
  const item = await db.updateItem({
    TableName: process.env.ITEM_TABLE!,
    Key: { itemId },
    UpdateExpression: `SET ${db.buildExpression(updateValues)}, createdAt = :createdAt, updatedAt = :updatedAt`,
    ExpressionAttributeValues: {
      ...db.buildAttributes(updateValues),
      ":createdAt": createdAt,
      ":updatedAt": new Date().toISOString(),
    },
    ReturnValues: "ALL_NEW",
  })
  return remapProps(item.Attributes)
}

We take the id from arguments or create a new one with uuidv4().

Then we try to find the item.

If found, we change createdAt to the existing value. Otherwise we throw an error. That helps you avoid creating new items by accident because DynamoDB always upserts.

We define the updateValues and build a DynamoDB UPDATE query. This can look gnarly no matter what DB you use.

In the end, we return the object our database returned and let GraphQL handle the rest.
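The upsert rules are easier to see without DynamoDB in the way. Here's a sketch of the same logic against an in-memory Map. The `table`, `nextId`, and `upsertItem` names are stand-ins, and `nextId` replaces uuidv4() so the example has no dependencies.

```typescript
interface StoredItem {
  itemId: string;
  itemName: string;
  body: string;
  createdAt: string;
  updatedAt: string;
}

// In-memory stand-in for the DynamoDB table, keyed by itemId.
const table = new Map<string, StoredItem>();

let idCounter = 0;
// Stand-in for uuidv4(); only unique within this process.
const nextId = () => `item-${++idCounter}`;

function upsertItem(
  args: { id?: string; name: string; body: string },
  now: Date = new Date()
): StoredItem {
  const itemId = args.id || nextId();
  const timestamp = now.toISOString();
  let createdAt = timestamp;

  if (args.id) {
    const existing = table.get(args.id);
    // Refuse to create an item under a caller-supplied id.
    if (!existing) throw new Error("Item not found");
    // Keep the original creation time on update.
    createdAt = existing.createdAt;
  }

  const item: StoredItem = {
    itemId,
    itemName: args.name,
    body: args.body,
    createdAt,
    updatedAt: timestamp,
  };
  table.set(itemId, item);
  return item;
}
```

Three outcomes: no id creates, a known id updates and keeps createdAt, an unknown id throws.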

deleteItem()

The deleteItem() mutation is simple. GraphQL and the database do the work for us.

// src/mutations.ts
export const deleteItem = async (_: any, args: { id: string }) => {
  // DynamoDB handles deleting already deleted objects, no error :)
  const item = await db.deleteItem({
    TableName: process.env.ITEM_TABLE!,
    Key: {
      itemId: args.id,
    },
    ReturnValues: "ALL_OLD",
  })
  return remapProps(item.Attributes)
}

We take the id from mutation arguments, ask our database to delete the row, and return the old attributes.
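One thing to watch: as far as I know, DynamoDB returns no old attributes when the item didn't exist, so the remap helper would receive undefined. A minimal sketch of a variant that tolerates that; `remapPropsOrNull` and `DbItem` are hypothetical names.

```typescript
// Shape of an item as stored, with the renamed id and name attributes.
interface DbItem {
  itemId: string;
  itemName: string;
  [key: string]: unknown;
}

// Same remapping as remapProps, but returns null for a missing item.
// The schema declares deleteItem(...): Item as nullable, so null is a valid result.
function remapPropsOrNull(item?: DbItem) {
  if (!item) return null;
  return { ...item, id: item.itemId, name: item.itemName };
}
```

Deleting the same id twice then returns the old item the first time and null the second.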

add resolvers to the GraphQL server

We built the resolvers, now we add them to graphql.ts:

// src/graphql.ts
import { item } from "./queries"
import { updateItem, deleteItem } from "./mutations"
// ...
const resolvers = {
  Query: {
    item,
  },
  Mutation: {
    updateItem,
    deleteItem,
  },
}

This tells Apollo how to map queries and mutations to resolvers. We can use generic names because this is a small project.

You'll want to namespace in bigger apps.
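One way to keep names from colliding as the app grows is to give each model its own resolver module and merge them. This is a sketch, not an Apollo feature: `mergeResolvers` and the `ResolverModule` shape are hypothetical.

```typescript
type ResolverFn = (parent: unknown, args: any) => unknown;

// What each per-model module exports.
interface ResolverModule {
  Query?: Record<string, ResolverFn>;
  Mutation?: Record<string, ResolverFn>;
}

// Merges per-model modules into one resolvers object and refuses duplicate names.
function mergeResolvers(...modules: ResolverModule[]) {
  const merged = {
    Query: {} as Record<string, ResolverFn>,
    Mutation: {} as Record<string, ResolverFn>,
  };
  for (const mod of modules) {
    for (const kind of ["Query", "Mutation"] as const) {
      const fns: Record<string, ResolverFn> = mod[kind] ?? {};
      for (const [fieldName, fn] of Object.entries(fns)) {
        if (Object.prototype.hasOwnProperty.call(merged[kind], fieldName)) {
          throw new Error(`Duplicate ${kind} resolver: ${fieldName}`);
        }
        merged[kind][fieldName] = fn;
      }
    }
  }
  return merged;
}
```

With prefixed names like `itemUpdate` and `userUpdate`, a clash fails loudly at startup instead of one resolver silently replacing another.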

Try it out

Run yarn deploy and you get a GraphQL server. There's even an Apollo playground that helps you test.

Make sure you change the URL inside the playground to: https://yrqqg5l31m.execute-api.us-east-1.amazonaws.com/dev/graphql. Apollo drops the /dev/ part.

GraphQL no-code services

Wiring up GraphQL can get tedious with lots of repetitive code.

Various no-code and low-code solutions exist that do the work for you. They look at your database and magic a GraphQL server into existence.

They tend to exhibit the "expose entire DB as API" problem. But they can be a good starting point.

Next chapter we look at building lambda pipelines for distributed data processing.